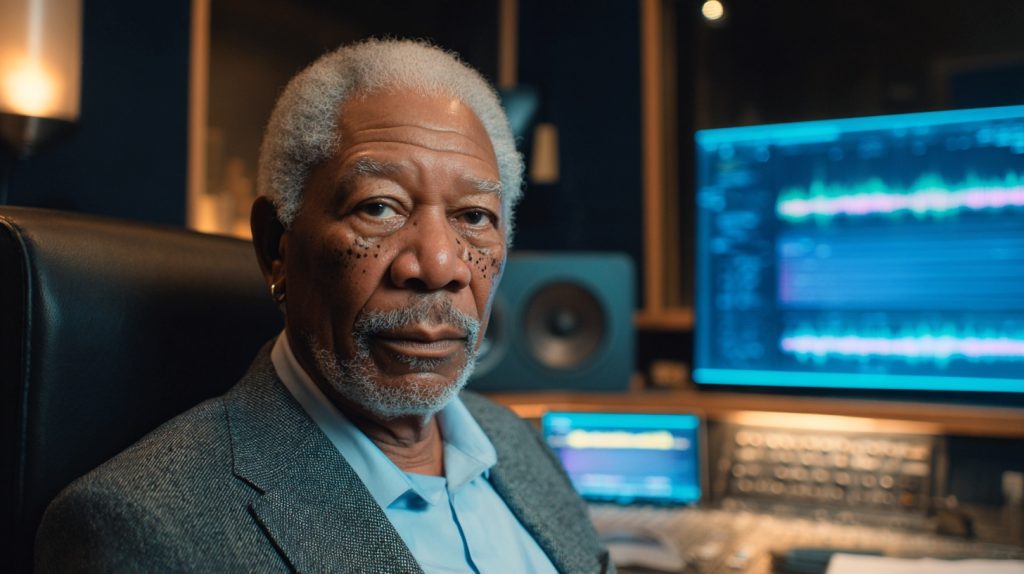

Morgan Freeman AI Voice clones are at the center of a brewing legal and cultural showdown. The 88-year-old Oscar winner is pursuing legal action after discovering a wave of unauthorized AI replicas of his voice used in videos, apps and adverts without his consent or payment.

The actor has described these Morgan Freeman AI Voice imitations as a form of theft, and says his lawyers are “very, very busy” tracking down offenders, according to coverage in the New York Daily News that drew on his interviews with The Guardian and The Independent.

What begins as a dispute over one famous voice quickly turns into a broader test case: when artificial intelligence can copy your tone, rhythm and authority in minutes, who controls what “you” say in the public square?

1. Morgan Freeman AI Voice Case Tests Ownership Of Identity

The first impact of the Morgan Freeman AI Voice battle is on the basic question of ownership. For decades, performers have fought over how their faces, names and images appear in ads, posters and digital cameos. Voice has always been part of that, but AI turns it into pure software.

Freeman’s legal move sits where several areas collide:

- Right of publicity: Whether a person’s unique voice can be turned into a synthetic product without permission.

- Copyright in recordings: How far protection extends once AI is trained on existing performances.

- Contract terms: How older deals, signed before modern AI, should be read when studios claim broad rights.

With current tools, a cloner does not need Freeman in the booth. A few minutes of clean audio is enough to train a Morgan Freeman AI Voice model that can:

- Record fake endorsements.

- Narrate scripts he never approved.

- Insert his voice into political or commercial content that conflicts with his values.

If a court finds this is a clear violation of enforceable rights, it strengthens the case for anyone whose identity is being treated as a free input to someone else’s AI product.

2. Morgan Freeman AI Voice Fight Becomes A Labor And Union Precedent

The second big shift concerns labor. Freeman has also criticized the broader trend of AI-generated performers, including the synthetic “actress” Tilly Norwood, which unions such as SAG-AFTRA view as a direct threat to working actors.

From a worker’s perspective, Morgan Freeman AI Voice cloning is not a clever tech demo. It is a tool for:

- Replacing professional voice actors with cheaper AI.

- Narrowing the number of paid roles in film, television and advertising.

- Weakening bargaining power by making any individual performer feel replaceable.

The Hollywood strikes over AI in 2023 and 2024 were an early battle line. Actors pushed for protections against unconsented digital doubles, clear pay for any AI reuse and transparency about how models are built.

A strong outcome in the Morgan Freeman AI Voice case can:

- Give unions a concrete legal example to point to in negotiations.

- Discourage studios from forcing “scan once, own forever” contracts on younger talent.

- Encourage collective standards for consent and royalties around AI voice cloning.

Even if Freeman settles privately, the mere existence of his legal campaign signals that the world’s most recognizable narrators will not quietly accept being turned into permanent training data.

3. Morgan Freeman AI Voice Lawsuit Forces Courts To Grapple With Consent And Deepfakes

The third way this fight could change the rules is by dragging AI deepfake audio into real legal doctrine, instead of leaving it in the realm of conference-panel anxiety.

Consent is the core issue. For each use of a Morgan Freeman AI Voice clone, courts will need to consider:

- Did Freeman meaningfully agree to this specific use or category of uses?

- Were any previous contracts clear enough to cover AI cloning, or are studios overreaching?

- Is there deception involved when AI audio is presented as real speech?

Deepfake audio in politics and civic life is a growing threat. A fake phone call of a candidate telling people not to vote, or a fabricated audio confession of a journalist, can spread faster than any correction. A Morgan Freeman AI Voice clip endorsing an extremist could do real damage before fact checkers catch up.

By recognizing unauthorized AI voice replicas as a violation of identity and possibly as a deceptive practice, courts can:

- Justify stronger penalties for harmful deepfake audio.

- Push platforms to treat flagged synthetic speech more seriously.

- Give regulators a legal backbone for new AI-specific laws.

In other words, this lawsuit can help move democracies from hand-wringing to enforceable standards.

4. Morgan Freeman AI Voice Clash Connects To AI Anchors And Synthetic News

The fourth impact is cultural and institutional. The Morgan Freeman AI Voice controversy is unfolding at the same time media organizations and startups are rolling out fully synthetic presenters.

Businesstech has already reported on Aisha Gaban, an AI-generated news presenter built to front daily bulletins without a human in the studio. That project raises its own questions about accountability and bias. Who is responsible when an AI anchor “says” something inflammatory? Whose style, accent and emotional cues were scraped to make her persuasive?

Freeman’s case sits upstream from that trend. Morgan Freeman AI Voice tools and similar celebrity clones are part of the raw material that can make synthetic anchors feel trustworthy and familiar.

If legal systems fail to protect an 88-year-old legend with global name recognition, the message to freelance narrators, local journalists and non-English performers is bleak. Their speech patterns can be vacuumed up and repackaged into AI products with even less scrutiny.

A stronger line around Morgan Freeman AI Voice rights would support a broader principle. AI can assist newsrooms and storytellers, but it should not silently cannibalize the labor and identity of the very people audiences trust.

5. Morgan Freeman AI Voice Outcome Could Shape Global AI Norms

The fifth way this lawsuit matters is global. It would be a mistake to treat Morgan Freeman AI Voice disputes as a Hollywood curiosity. Voice cloning systems already operate across languages and are being built into customer service, education and politics.

In countries with weaker institutions, synthetic audio can be a tool for repression and chaos. A fabricated recording of an activist planning violence, a fake call of a judge taking bribes, or a “leak” of an opposition leader insulting voters can be enough to justify arrests or smear campaigns.

If courts in a major jurisdiction treat Morgan Freeman AI Voice cloning without consent as a serious, compensable harm, that has ripple effects beyond the United States. It can:

- Influence cross-border data and content negotiations.

- Inform platform policies around deepfake detection and labeling.

- Encourage other governments to adopt similar rights-based standards.

A fair outcome could also encourage a more balanced AI voice ecosystem, where:

- Performers explicitly opt in to cloning.

- Contracts limit contexts such as political advertising.

- Transparent royalty systems pay people when AI versions of their work are used.

- Clear audit trails and watermarks help the public tell real from fake.

The technology will stay. The question Freeman is pressing is whether it will be governed by public rules that respect human beings or by private terms of service written around them.