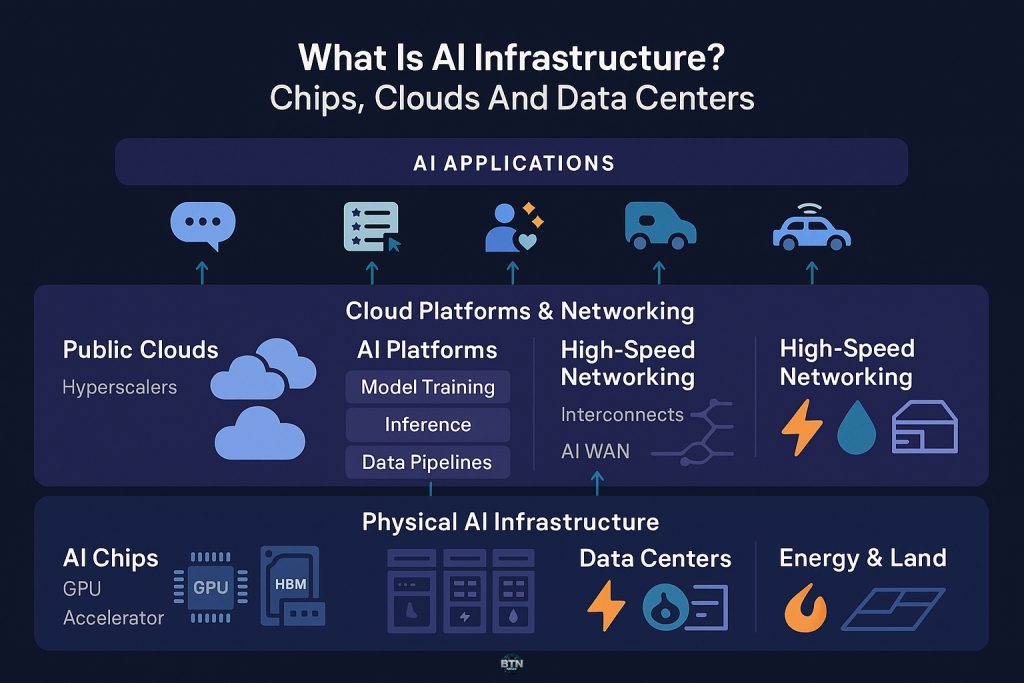

What is AI infrastructure? The question matters more now than at any point in computing history. Every time you ask ChatGPT a question, stream a recommendation on Netflix, or watch your phone autocorrect a text message, you are tapping into a vast, invisible architecture of silicon, electricity, and software. That architecture is AI infrastructure, and it is being rebuilt from the ground up to handle workloads that did not exist five years ago.

Understanding AI infrastructure means looking past the hype and into the machinery. It is not just about faster computers. It is about specialized chips designed to crunch trillions of calculations per second, data centers consuming as much power as small cities, and cloud platforms that let a startup in Bangalore train a language model without owning a single server. The stakes are enormous. Governments are pouring hundreds of billions of dollars into domestic chip production. Hyperscalers like Microsoft and Google are racing to build what they call AI factories. And the companies that control this infrastructure are shaping who gets to build the future.

This is not a story about technology for technology’s sake. AI infrastructure touches questions of power, access, and democracy. When a handful of firms control the chips that make AI possible, they control the on‑ramp to the next industrial revolution. When data centers require gigawatts of electricity and sophisticated cooling systems, they raise hard questions about energy use, environmental impact, and whether the benefits of AI will be distributed fairly or concentrated in the hands of a few. This guide walks through the three pillars of AI infrastructure (chips, clouds, and data centers) and explains why each one matters.

What Is AI Infrastructure: The Role of Specialized Chips

The first pillar of AI infrastructure is the chip. Not just any chip, but processors purpose‑built for the kind of math that makes modern AI work. Traditional central processing units (CPUs) were designed to handle a wide variety of tasks, mostly one after another. They are generalists. AI, by contrast, thrives on parallelism. Training a large language model means performing enormous numbers of matrix multiplications, work that can be split across thousands of cores at once, and that is where graphics processing units (GPUs) and custom accelerators come in.

NVIDIA dominates this space. The company holds roughly 86% of the AI GPU market as of 2025, and its H100 and newer Blackwell chips have become the de facto standard for training frontier AI models. Each H100 can cost between $25,000 and $40,000, and hyperscalers are buying them by the tens of thousands. Microsoft’s new Fairwater data center in Wisconsin, for example, houses hundreds of thousands of NVIDIA GPUs in a single interconnected cluster, creating what the company calls the world’s most powerful AI supercomputer.

But NVIDIA is not the only player. AMD is making a serious push with its MI300 series, which offers 192GB of high‑bandwidth memory compared to the H100’s 80GB. That extra memory matters when you are training models with hundreds of billions of parameters. Intel, meanwhile, is betting on affordability with its Gaudi chips, positioning them as a cost‑effective alternative for enterprises that cannot justify NVIDIA’s premium pricing. And then there are the hyperscalers themselves. Google has its Tensor Processing Units (TPUs), Amazon has Trainium and Inferentia, and even Tesla is building its own Dojo chips to train self‑driving models. The message is clear: if you rely on someone else’s chips, you are at their mercy.
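A rough back‑of‑the‑envelope sketch shows why that memory gap matters. The snippet below estimates how many accelerators are needed just to hold a model's weights. It assumes 2 bytes per parameter (16‑bit precision) and ignores activations, optimizer state, and other overhead, all of which push real requirements higher. The 175‑billion‑parameter model size and the function name are illustrative assumptions, not vendor figures.

```python
import math

BYTES_PER_PARAM_FP16 = 2  # assumption: weights stored in 16-bit precision


def min_devices_for_weights(num_params: float, device_memory_gb: float,
                            bytes_per_param: int = BYTES_PER_PARAM_FP16) -> int:
    """Lower bound on devices needed to hold the weights alone."""
    weights_gb = num_params * bytes_per_param / 1e9  # decimal gigabytes
    return math.ceil(weights_gb / device_memory_gb)


params = 175e9  # hypothetical model size, for illustration only

# 80 GB per device (the H100 figure cited above)
print(min_devices_for_weights(params, 80))
# 192 GB per device (the MI300 figure cited above)
print(min_devices_for_weights(params, 192))
```

Under these assumptions the weights alone occupy 350 GB, which needs at least five 80GB devices but only two 192GB devices. Fewer devices means less traffic between chips, which is part of why memory capacity is a selling point.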

Understanding AI infrastructure means recognizing that chips are not commodities. They are designed with specific architectures, memory hierarchies, and interconnects that determine what kinds of AI workloads they can handle efficiently. High‑bandwidth memory (HBM), for instance, has become a bottleneck. Demand for HBM chips is projected to grow 50% year‑over‑year as AI models get larger and more memory‑intensive. Companies that can secure access to cutting‑edge chips, and to the foundries that produce them (primarily Taiwan’s TSMC), have a structural advantage in the AI race.

What Is AI Infrastructure: Cloud Platforms as the Delivery Mechanism

The second pillar of AI infrastructure is the cloud. Chips are useless if you cannot access them, and most organizations do not have the capital or expertise to build their own AI data centers. That is where cloud platforms come in. Amazon Web Services, Microsoft Azure, and Google Cloud have become the primary way companies and researchers access AI compute at scale.

Cloud providers offer more than just raw processing power. They bundle chips with software frameworks, pre‑trained models, and managed services that lower the barrier to entry. If you want to fine‑tune a language model, you do not need to understand the intricacies of GPU memory management or distributed training. You rent a cluster, upload your data, and let the platform handle the rest. This abstraction is powerful, but it also creates dependency. When Microsoft invested 10 billion dollars in OpenAI, it was not just a financial bet. It was a strategic move to lock OpenAI’s workloads into Azure, ensuring that every ChatGPT query runs on Microsoft infrastructure.

The cloud model also introduces new economics. Training a large AI model can cost millions of dollars in compute time. Inference, the process of running a trained model to generate predictions or responses, is cheaper per query but adds up fast at scale. Cloud providers are now competing on inference efficiency, rolling out custom chips designed to handle these workloads more cost‑effectively. Amazon’s Inferentia chips, for example, are designed to cut AI inference costs by more than 50% compared to traditional GPU‑based instances. For a company running millions of AI queries per day, that difference is existential.
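To make the "adds up fast" point concrete, here is a minimal sketch of the arithmetic. The query volume and the price per 1,000 queries are hypothetical placeholders, not published rates from any provider. Only the 50% reduction mirrors the claim above.

```python
def annual_inference_cost(queries_per_day: int, cost_per_1k_queries: float) -> float:
    """Yearly spend in dollars, assuming constant daily volume and price."""
    return queries_per_day / 1000 * cost_per_1k_queries * 365


QUERIES_PER_DAY = 5_000_000  # hypothetical workload
BASELINE_PRICE = 2.00        # hypothetical dollars per 1,000 queries on GPU instances
REDUCED_PRICE = BASELINE_PRICE * 0.5  # a 50% cut, as in the claim above

baseline = annual_inference_cost(QUERIES_PER_DAY, BASELINE_PRICE)
reduced = annual_inference_cost(QUERIES_PER_DAY, REDUCED_PRICE)

print(f"baseline: ${baseline:,.0f} per year")
print(f"reduced:  ${reduced:,.0f} per year")
print(f"savings:  ${baseline - reduced:,.0f} per year")
```

With these made‑up inputs the yearly bill falls from $3.65 million to about $1.83 million. The absolute numbers are invented, but the cost scales linearly with query volume, so the same percentage cut is worth ten times as much at ten times the traffic.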

AI infrastructure in the cloud context also means understanding the geopolitics of access. US export controls restrict the sale of advanced AI chips to China, which has forced Chinese companies to rely on older hardware or develop domestic alternatives. Cloud providers operating in multiple jurisdictions have to navigate a patchwork of regulations, and the result is a fragmented global AI infrastructure where access to cutting‑edge compute is unevenly distributed. That fragmentation has consequences. It shapes which countries can develop frontier AI models, which startups can compete, and which populations benefit from AI‑driven services.

What Is AI Infrastructure: Data Centers as the Physical Foundation

The third pillar of AI infrastructure is the data center. This is where the chips live, where the power flows, and where the cooling systems work overtime to keep everything from melting. AI data centers are not like traditional data centers. They are purpose‑built facilities designed to handle power densities and thermal loads that would have been unthinkable a decade ago.

A traditional data center rack might draw 5 to 15 kilowatts of power. An AI rack packed with NVIDIA H100 GPUs can draw over 100 kilowatts, and next‑generation systems are expected to exceed 1,000 kilowatts per rack. That kind of power density creates serious engineering challenges. Electrical systems have to be redesigned to handle the load. Cooling systems have to evolve from air‑based to liquid‑based solutions. And the facilities themselves have to be located near reliable power sources, which is why you see AI data centers being built near hydroelectric dams, nuclear plants, and renewable energy farms.
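The jump in power density is easier to grasp with numbers. The sketch below takes a fixed power budget and asks how many racks it can feed at each density, and how much energy a single rack draws in a year. The 100 MW budget is a hypothetical figure, the per‑rack values are round numbers drawn from the ranges above, and the calculation assumes constant full load with no cooling overhead.

```python
HOURS_PER_YEAR = 8760  # 24 hours x 365 days


def racks_supported(facility_kw: int, rack_kw: int) -> int:
    """Whole racks that fit within a fixed IT power budget."""
    return facility_kw // rack_kw


def annual_energy_mwh(rack_kw: int) -> float:
    """Energy one rack draws in a year at constant full load, in MWh."""
    return rack_kw * HOURS_PER_YEAR / 1000


facility_kw = 100_000  # hypothetical 100 MW budget for IT equipment

for label, rack_kw in [("traditional", 10), ("AI today", 100), ("next-gen", 1000)]:
    print(label, racks_supported(facility_kw, rack_kw), annual_energy_mwh(rack_kw))
```

Under these assumptions the same 100 MW feeds 10,000 traditional racks but only 1,000 AI racks at 100 kW each. All of that power ends up as heat in a much smaller footprint, which is the pressure pushing operators from air cooling toward liquid.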

Microsoft’s Fairwater data center in Wisconsin is a case study in AI infrastructure at scale. The facility covers hundreds of acres and required tens of millions of pounds of structural steel and deep foundation piles to build. It uses a closed‑loop liquid cooling system that recirculates water continuously, eliminating the need for constant water replenishment. The cooling fins on the sides of the building are cooled by enormous industrial fans, and the system is designed to handle peak loads without evaporation losses. A large majority of the facility uses this zero‑operational‑water cooling approach, a significant improvement over older designs that consumed millions of gallons per year.

The scale of investment is staggering. Hyperscalers are expected to spend close to a trillion dollars on data center infrastructure by 2030, driven almost entirely by AI demand. In 2024 alone, Alphabet, Microsoft, Amazon, and Meta invested close to 200 billion dollars in capital expenditures, and that figure is expected to climb sharply in 2025. The money is going into servers, networking equipment, electrical systems, and cooling infrastructure. Companies that provide power distribution, backup generators, and thermal management are seeing record demand as AI infrastructure ramps up.

AI infrastructure in the data center context also means understanding the supply chain. NVIDIA does not manufacture its own chips. It relies on TSMC, which is ramping up production to meet demand. Contract manufacturers assemble the servers that house those chips. Networking vendors provide the switches and routers that connect them. And all of this has to be coordinated, shipped, and installed in facilities that are being built as fast as construction crews can work. When a single component is delayed, entire projects stall. When geopolitical tensions disrupt chip supply, the ripple effects are felt across the entire AI ecosystem.

If you want a concrete example of how AI infrastructure is reshaping the physical world, look at our coverage of the BlackRock‑aligned data center acquisition, which shows how private capital is snapping up the properties that will underpin this new compute economy. That story illustrates how financialization meets infrastructure in ways that will matter for decades.

What Is AI Infrastructure: The Interconnect and Networking Layer

One often overlooked aspect of AI infrastructure is the networking layer that ties everything together. AI training workloads require massive amounts of data to move between GPUs, storage systems, and compute nodes. If the network cannot keep up, the GPUs sit idle, and expensive hardware goes to waste. That is why companies like NVIDIA have invested heavily in interconnect technologies like NVLink, which allows GPUs to communicate at extremely high bandwidth.
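A simplified model shows how a slow link turns into idle GPUs. The sketch below assumes each training step consists of a compute phase followed by a communication phase with no overlap between them. Real training frameworks often overlap the two, so this is a worst‑case illustration. The payload size, bandwidth figures, and compute time are all hypothetical.

```python
def transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Time to move a payload over a link, ignoring latency and protocol overhead."""
    return payload_gb / bandwidth_gb_per_s


def gpu_utilization(compute_s: float, comm_s: float) -> float:
    """Fraction of each step spent computing, assuming no compute/comm overlap."""
    return compute_s / (compute_s + comm_s)


COMPUTE_S = 0.8    # hypothetical compute time per training step
PAYLOAD_GB = 10.0  # hypothetical data exchanged per step

for bandwidth in (50.0, 400.0):  # hypothetical link speeds in GB/s
    comm_s = transfer_seconds(PAYLOAD_GB, bandwidth)
    print(f"{bandwidth:.0f} GB/s -> utilization {gpu_utilization(COMPUTE_S, comm_s):.1%}")
```

With these inputs the slower link leaves the GPU waiting a fifth of the time, while the faster one cuts the wait to about 3%. Across a cluster of tens of thousands of GPUs, that gap is a large share of the hardware budget doing nothing.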

Microsoft’s Fairwater data center uses a two‑story rack configuration specifically to reduce latency. By stacking racks vertically and connecting them both horizontally and vertically, the facility minimizes the physical distance data has to travel. This kind of architectural innovation is critical when you are trying to train models with hundreds of billions of parameters across tens of thousands of GPUs. Every millisecond of latency adds up, and at scale, those delays can mean the difference between a model that trains in weeks versus months.

The networking challenge extends beyond individual data centers. Hyperscalers are now building AI‑optimized wide area networks that connect multiple data centers into a single distributed supercomputer. This allows them to pool resources across geographies, improve resilience, and scale more flexibly. But it also introduces new complexities. Data has to be synchronized across sites, workloads have to be orchestrated, and security has to be maintained across a distributed infrastructure. The companies that can solve these problems will have a structural advantage in the AI era.

What Is AI Infrastructure: The Environmental and Energy Costs

Understanding AI infrastructure also means reckoning with its environmental footprint. AI data centers are energy‑intensive by design. Training a single large language model can consume as much electricity as hundreds of homes use in a year. Inference workloads, while less intensive per query, add up fast when you are serving millions of users. And as AI becomes more embedded in everyday applications, the energy demands will only grow.
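The "hundreds of homes" comparison can be sanity‑checked with simple arithmetic. Every input in the sketch below is an assumption chosen for illustration: the cluster size, the run length, a 700‑watt draw per GPU, a facility overhead multiplier of 1.2, and an average household consumption of 10,500 kWh per year. Change any of them and the answer moves accordingly.

```python
def training_energy_kwh(num_gpus: int, watts_per_gpu: int,
                        hours: int, overhead: float) -> float:
    """Energy for a training run, scaled by a facility overhead multiplier."""
    return num_gpus * watts_per_gpu * hours * overhead / 1000


def equivalent_homes(energy_kwh: float, home_kwh_per_year: float) -> float:
    """How many homes' annual electricity use the run corresponds to."""
    return energy_kwh / home_kwh_per_year


# All values below are hypothetical assumptions, not measurements.
energy = training_energy_kwh(
    num_gpus=4_000,
    watts_per_gpu=700,
    hours=24 * 30,   # a 30-day run
    overhead=1.2,    # cooling and power-delivery losses
)
homes = equivalent_homes(energy, home_kwh_per_year=10_500)

print(f"{energy:,.0f} kWh, roughly {homes:.0f} homes for a year")
```

Under these assumptions a month‑long run on 4,000 GPUs uses about 2.4 million kWh, on the order of 230 homes' annual consumption. Larger clusters and longer runs scale the figure up proportionally.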

The industry is responding, but the solutions are not simple. Liquid cooling systems reduce water waste but require significant upfront investment. Renewable energy contracts help offset carbon emissions, but they do not eliminate the underlying demand for power. And as data centers cluster in regions with cheap electricity, they can strain local grids and drive up costs for other users. These are not hypothetical concerns. They are playing out in real time in places where data center growth has sparked debates about grid capacity and energy equity.

From a progressive perspective, the question is not whether AI infrastructure should exist. It is who benefits from it and who bears the costs. If AI‑driven productivity gains accrue primarily to a handful of tech giants while the environmental and social costs are distributed across communities, that is a problem. If access to cutting‑edge AI infrastructure is limited to well‑funded startups and large corporations, that entrenches existing inequalities. The infrastructure choices being made today will shape the distribution of AI’s benefits and burdens for decades to come.

What Is AI Infrastructure: The Competitive Landscape and Market Dynamics

The final piece of understanding AI infrastructure is recognizing the competitive dynamics at play. NVIDIA’s dominance is real, but it is not guaranteed. AMD is gaining traction with its MI300 series, and Intel is positioning its Gaudi chips as a cost‑effective alternative. Hyperscalers are investing billions in custom silicon to reduce their dependence on NVIDIA. And startups working on novel architectures are challenging the GPU‑centric paradigm from the edges.

The AI chip market is projected to grow from tens of billions of dollars today to well over a hundred billion within a few years, with compound annual growth rates north of 40 percent. That kind of growth attracts competition, and it also attracts regulatory scrutiny. Export controls on advanced chips are reshaping global supply chains. China is investing heavily in domestic chip production to reduce its reliance on foreign suppliers. And Europe is trying to build its own AI infrastructure to avoid dependence on US and Chinese platforms.
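Compound growth at these rates is steeper than it sounds. The sketch below applies the standard compound annual growth formula to a hypothetical starting market size of $50 billion at a 40% rate. The starting figure is an assumption picked to sit inside the "tens of billions" range, not a market estimate.

```python
import math


def project(start: float, rate: float, years: float) -> float:
    """Value after compounding at a fixed annual growth rate."""
    return start * (1 + rate) ** years


def years_to_reach(start: float, target: float, rate: float) -> float:
    """Years of compounding needed for start to grow to target."""
    return math.log(target / start) / math.log(1 + rate)


START_BILLIONS = 50.0  # hypothetical starting market size
RATE = 0.40            # 40 percent compound annual growth

for year in range(1, 4):
    print(year, round(project(START_BILLIONS, RATE, year), 1))

print(round(years_to_reach(START_BILLIONS, 100.0, RATE), 2))
```

At 40% a year the market doubles in just over two years and nearly triples in three, which is how "tens of billions" becomes "well over a hundred billion" so quickly.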

For businesses and policymakers, the lesson is clear. AI infrastructure is not a neutral substrate. It is a site of geopolitical competition, economic power, and democratic contestation. The companies and countries that control the chips, clouds, and data centers will have outsized influence over the AI‑driven future. And the rules that govern access to that infrastructure will determine whether AI becomes a tool for broad‑based prosperity or a mechanism for concentrating wealth and power.

Where AI Infrastructure Really Sits in the System Now

Put these pieces together and a clearer picture of AI infrastructure emerges. It is not just about faster chips or bigger data centers. It is about a tightly integrated stack of hardware, software, and services that enables AI at scale. It is about supply chains that span continents, energy systems that power entire cities, and business models that lock customers into ecosystems. And it is about choices, made by engineers, executives, and policymakers, that will shape the trajectory of AI for decades.

For progressives who care about democratic institutions and equitable outcomes, the infrastructure layer is where the fight happens. It is where questions of access, cost, and control get decided. It is where the environmental and social costs of AI become visible. And it is where the potential for public investment, open standards, and accountable governance can make a real difference.

The technology is only half the story. The other half is what courts, legislatures, regulators, and citizens decide to do with it. That is where AI infrastructure will finally find its place in the system, and where the rest of us will live with the consequences. Understanding AI infrastructure is the first step toward shaping that outcome democratically.