Human AI teams vs fully autonomous AI has become a practical question for leaders, not a sci-fi debate. Budgets, headcount plans, and union contracts are already being written around it. In real organizations, the decision shows up in small, specific ways. Do you give call center agents an AI copilot, or do you roll out a fully automated support bot that talks to customers on its own? Do you help analysts write better reports, or replace them with autonomous agents that generate dashboards overnight?

That choice is not only about efficiency. It is about power, accountability, and the kinds of institutions we want to build around artificial intelligence.

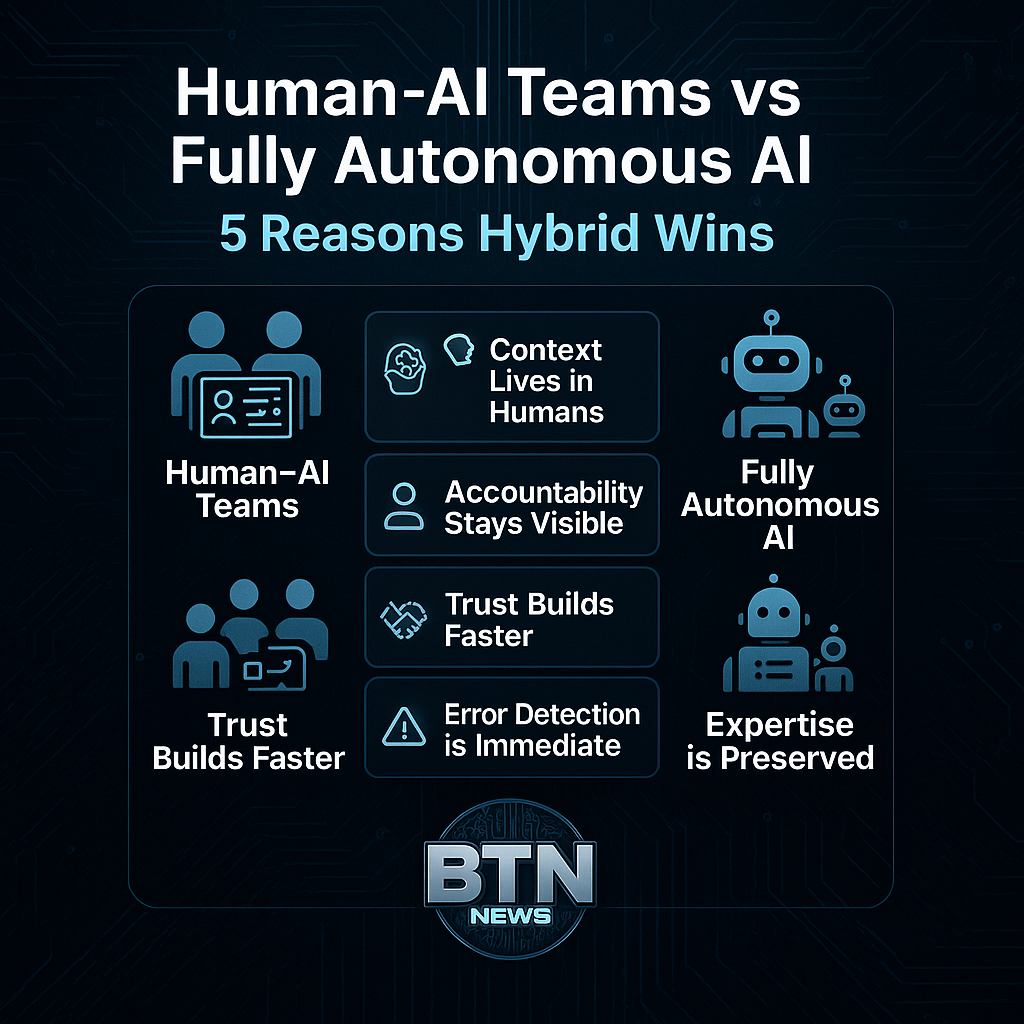

Why Human AI Teams Usually Win In The Real World

Evidence from early deployments is surprisingly consistent. When researchers at MIT studied generative AI in customer support, they found that average productivity rose, but the biggest gains came from less experienced workers who suddenly performed much closer to top performers once AI helped them search, summarize, and draft responses. This trend is backed by recent economic analysis showing that the highest value today often comes from AI systems that augment people rather than replace them entirely, especially in customer facing and knowledge intensive roles.

The pattern makes intuitive sense. Here are five primary reasons why human AI teams, rather than fully autonomous AI, are usually the winning strategy for modern enterprises:

- Context still lives in humans.

Processes look simple in a slide deck. On the ground, they are held together by tacit knowledge. A salesperson knows which client is quietly looking at competitors. A warehouse supervisor knows which supplier always slips before holidays. Fully autonomous AI can only see what is in the data. Human AI teams bring that lived context into the loop.

- Accountability stays visible.

When a human agent uses AI to respond to a customer, there is still someone who can be named in a complaint or a review. If something goes wrong, the company can examine prompts, policies, and training data. With end to end autonomous agents, decision making moves into a technical and contractual fog that is much harder for regulators or courts to penetrate.

- Trust builds faster when AI is a partner, not a rival.

Workers are more willing to adopt tools that make them better at their jobs than tools that feel designed to erase those jobs. Adoption and learning curves matter more than theoretical peak performance. A modest AI copilot that thousands of employees trust is worth more than an elegant autonomous system that nobody wants to use.

- Error detection is immediate.

In a hybrid model, the human acts as a real time filter for hallucinations or logic errors. Fully autonomous systems can “drift” for weeks, making thousands of small, incorrect decisions before a human ever audits the output. Human AI teams catch these glitches before they reach the customer.

- Institutional expertise is preserved.

Over reliance on autonomous systems for judgment heavy work erodes internal expertise. When models make all the complex calls, the organization gradually forgets how to reason about those calls. Human AI teams ensure that the next generation of leaders still understands the core mechanics of the business.

Human AI Teams vs Fully Autonomous AI In Day To Day Operations

Inside real businesses, human AI teams vs fully autonomous AI does not play out as an abstract “human versus machine” battle. It shows up by function.

Human AI teams are proving especially effective in:

- Customer service.

Agents use AI to suggest answers, pull policy text, and summarize long conversations. Humans still decide tone, exceptions, and escalations.

- Sales and marketing.

AI drafts outreach, segments audiences, and proposes offers. People decide which campaigns are on brand, inclusive, and politically acceptable.

- Software development.

AI suggests code, tests, and refactors. Engineers still own architecture, security, and what actually gets deployed.

Fully autonomous AI is gaining ground in narrower, more standardized contexts:

- Back office workflows.

Matching invoices, updating records, resetting passwords, or routing tickets inside well defined systems.

- Industrial control.

Predictive maintenance and dynamic scheduling where the actions and acceptable risks are clearly bounded.

In practice, many organizations start with human AI teams and then carve out small, stable subflows for full automation once they understand the edge cases. That gradualism is a form of risk management, not a lack of ambition. For mid size companies in particular, careful staging and change management can make or break an AI rollout, which is why detailed, step by step deployment playbooks are becoming essential reading for operators and CIOs.
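One way to picture that staging is a routing rule that lets an agent act alone only inside an approved subflow and sends everything else to a person. The Python below is a minimal sketch under stated assumptions: the subflow names, the `CONFIDENCE_THRESHOLD` value, and the `high_stakes` flag are all hypothetical and would need to be set from an organization's own error data and risk policy.

```python
from dataclasses import dataclass

# Hypothetical allowlist of subflows judged stable enough to automate.
AUTOMATED_SUBFLOWS = {"password_reset", "invoice_match"}
# Hypothetical cutoff; a real value would come from measured error rates.
CONFIDENCE_THRESHOLD = 0.95


@dataclass
class Task:
    subflow: str        # which workflow the task belongs to
    confidence: float   # model's self-reported confidence, 0.0 to 1.0
    high_stakes: bool   # set by the business, e.g. affects livelihoods or rights


def route(task: Task) -> str:
    """Return "auto" if the agent may act alone, otherwise "human"."""
    if task.high_stakes:
        return "human"  # high stakes decisions always get human review
    if task.subflow not in AUTOMATED_SUBFLOWS:
        return "human"  # not yet carved out for automation
    if task.confidence < CONFIDENCE_THRESHOLD:
        return "human"  # likely edge case, let a person decide
    return "auto"
```

Widening the allowlist one subflow at a time is the code-level equivalent of the gradual approach described above: the default is human review, and automation has to be earned per workflow.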

Democratic Norms, Bias, And Who Gets To Decide

Beneath the operational question of human AI teams vs fully autonomous AI sits a democratic one: who decides how much autonomy is acceptable when systems make decisions that affect citizens’ lives.

When AI sits beside a case worker, loan officer, or HR manager, there is at least a visible human decision maker. There is someone who can be asked to explain an outcome and who can be challenged if that outcome looks biased or arbitrary. That aligns with basic democratic norms. Decisions that affect people’s livelihoods or rights should be explainable and appealable.

By contrast, fully autonomous AI in hiring screens, credit scoring, or risk assessment creates serious governance problems. If a model quietly denies you a loan, flags you as a risky job applicant, or scores your “likelihood to churn,” who can you talk to? How do you appeal? Which regulator is able to audit the model, its data, and its downstream uses in a meaningful way?

From a progressive perspective, three guardrails matter here:

- Meaningful human review for high stakes decisions.

Not a rubber stamp, but a real opportunity to override the model based on new evidence or ethical concerns.

- Right to explanation and contestation.

Individuals should be able to understand the basis of an automated decision and challenge it through an accessible process.

- Institutional oversight.

Public bodies need authority and technical capacity to inspect models and data, particularly in public services, employment, and finance.

The Productivity Temptation And Hidden Costs Of Full Autonomy

The economic case for fully autonomous AI is seductive. Once you have enough clean data, the argument goes, let agents run the workflow end to end. Cut labor costs. Standardize processes. Scale without hiring.

In narrow contexts, this works. Automated agents can reconcile transactions, monitor logs, or move data between systems at speeds humans will never match. For commoditized tasks, that level of automation will likely become the baseline.

Hidden costs appear when leaders try to extend that logic to messier, judgment heavy work:

- Model drift and silent failures.

In human AI teams, frontline workers notice when suggestions start to “feel wrong” and raise red flags. In fully autonomous systems, subtle harm can accumulate for weeks before anyone looks closely.

- Ethical and reputational exposure.

A misrouted support ticket is annoying. An autonomous system that cuts off benefits, mislabels communities as risky, or denies housing applications can quickly turn into a legal crisis and a social media firestorm.

Boards and executives who care about long term resilience need to factor these institutional risks alongside the pure cost savings from automation.
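The "feels wrong" signal from frontline workers can be made measurable. One possible approach, sketched here in Python, is to track how often human reviewers override AI suggestions over a rolling window and flag when that rate climbs well above its usual level. The class name, window size, baseline rate, and tolerance multiplier are illustrative assumptions, not figures from any study or product.

```python
from collections import deque


class OverrideMonitor:
    """Tracks how often human reviewers override AI suggestions."""

    def __init__(self, window: int = 200, baseline: float = 0.05,
                 tolerance: float = 2.0):
        # Each entry is True when the human overrode the AI suggestion.
        self.events = deque(maxlen=window)
        self.baseline = baseline    # assumed normal override rate
        self.tolerance = tolerance  # alert when rate exceeds baseline * tolerance

    def record(self, overridden: bool) -> None:
        self.events.append(overridden)

    def override_rate(self) -> float:
        if not self.events:
            return 0.0
        return sum(self.events) / len(self.events)

    def drifting(self) -> bool:
        # Wait until the window is full to avoid noisy early alerts.
        if len(self.events) < self.events.maxlen:
            return False
        return self.override_rate() > self.baseline * self.tolerance
```

A monitor like this only works while humans are still reviewing a meaningful share of the output, which is one practical argument for keeping people in the loop even in workflows that are mostly automated.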

So Which Works Better For Real Businesses?

Across most current evidence, human AI teams vs fully autonomous AI is not an even match. For the majority of real world businesses, especially those dealing directly with customers or citizens, hybrid models deliver:

- Broader and faster adoption across the workforce

- Strong performance in both routine and ambiguous tasks

- Better alignment with democratic norms, legal standards, and public expectations

Fully autonomous AI has a clear and growing role in narrow, standardized, and auditable workflows. It will keep expanding there. But the fantasy of a near term “agent swarm” quietly running entire organizations with minimal human involvement is both technically optimistic and politically risky.

If we want institutions that are efficient, fair, and ultimately legitimate in democratic societies, the center of gravity should be human AI teams. People choose goals, values, and constraints. AI handles scale, pattern recognition, and recall. Together, they create systems where accountability remains visible enough that when something breaks, we can see it, debate it, and fix it in public.