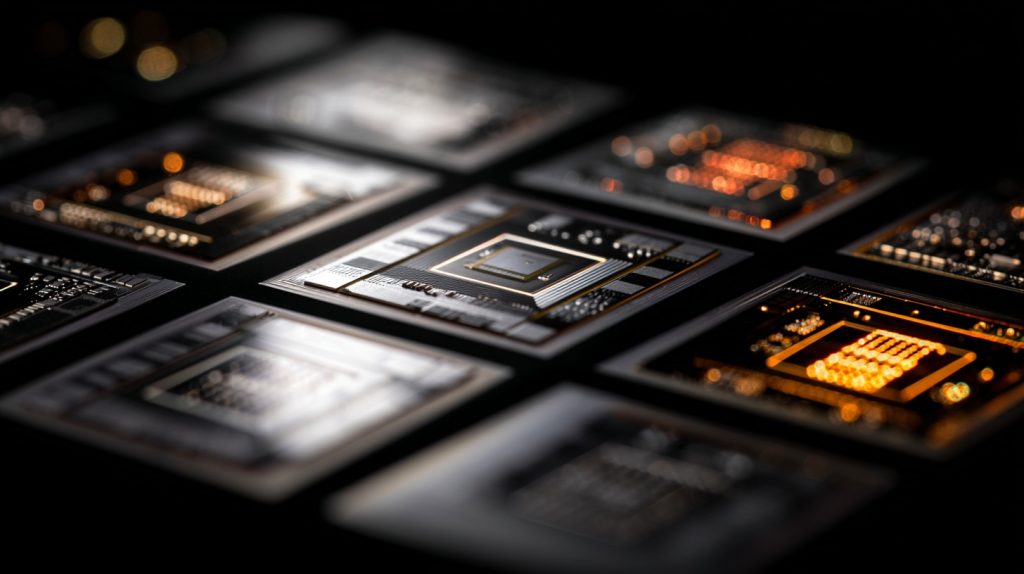

OpenAI’s custom AI chips, built with Broadcom, are coming, and the scale is staggering. The company behind ChatGPT announced a partnership with the semiconductor giant to design and deploy 10 gigawatts of custom AI chips over the next four years, a power draw equivalent to running a large city. That’s not a typo. We’re talking about computational infrastructure that would light up multiple Detroits, all to fuel OpenAI’s trillion-dollar infrastructure ambitions.

The partnership, unveiled Monday, represents more than just another corporate handshake in Silicon Valley’s endless game of musical chairs. It’s a signal that the AI arms race has entered a new phase, one where the winners will be determined not by who writes the cleverest algorithms, but by who can secure the physical infrastructure to run them at planetary scale.

The Infrastructure Play That Changes Everything

Here’s what makes this deal fascinating: OpenAI isn’t just buying chips off the shelf anymore. The company will design its own AI accelerators, with Broadcom handling the development and deployment. It’s a vertical integration strategy that echoes what Google did years ago with its Tensor Processing Units, and what Amazon has pursued with its custom silicon efforts.

The move sidesteps, at least partially, OpenAI’s current dependence on Nvidia’s GPUs, which have become both indispensable and impossibly scarce as AI demand has exploded. Nvidia’s H100 and H200 chips remain the gold standard for training large language models, but waiting lists stretch months and prices remain astronomical. By designing custom inference chips (optimized for running AI models rather than training them), OpenAI is betting it can achieve better performance per watt and better economics at the scales it operates.

Broadcom’s stock surged nearly 10% on the news, and for good reason. The company has quietly positioned itself as the arms dealer to AI’s warring nations. While Nvidia dominates training chips, Broadcom specializes in custom silicon and networking components, the unglamorous plumbing that actually moves data around massive data centers. In AI infrastructure, networking bottlenecks can matter as much as raw compute power.

When Your Power Bill Rivals a Small Nation

The 10-gigawatt figure deserves a moment of contemplation. That’s 10 billion watts of continuous power draw. To put it in perspective, the average U.S. household draws about 1.2 kilowatts when its annual electricity use is averaged over the year. Divide 10 gigawatts by 1.2 kilowatts and OpenAI’s new chip deployment, when fully operational, would consume power equivalent to more than 8 million homes running simultaneously.
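For readers who want to check the household comparison, here is a minimal back-of-the-envelope sketch. It uses only the two figures quoted above (10 gigawatts and roughly 1.2 kilowatts per household); the function names and the assumption that the chips run flat out for all 8,760 hours of a year are illustrative, not anything from the announcement.

```python
# Back-of-the-envelope check of the 10-gigawatt comparison.
# Inputs are the article's figures; everything else is an illustrative assumption.

DEPLOYMENT_WATTS = 10e9   # 10 gigawatts, as announced
HOUSEHOLD_WATTS = 1.2e3   # ~1.2 kW average household draw (article's figure)
HOURS_PER_YEAR = 8760     # assumption: non-leap year, continuous full load


def homes_equivalent(deployment_watts: float, household_watts: float) -> float:
    """Number of average households drawing the same power."""
    return deployment_watts / household_watts


def annual_energy_twh(deployment_watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy used in one year at constant draw, in terawatt-hours."""
    watt_hours = deployment_watts * hours
    return watt_hours / 1e12  # 1 TWh = 1e12 Wh


if __name__ == "__main__":
    homes = homes_equivalent(DEPLOYMENT_WATTS, HOUSEHOLD_WATTS)
    energy = annual_energy_twh(DEPLOYMENT_WATTS)
    print(f"Equivalent households: {homes / 1e6:.1f} million")
    print(f"Annual energy at full load: {energy:.1f} TWh")
```

The division lands at roughly 8.3 million households, which is where the “more than 8 million homes” figure comes from. The annual-energy number is an upper bound under the full-load assumption; real data centers rarely run at 100% of rated capacity around the clock.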

This energy appetite raises questions that extend far beyond OpenAI’s quarterly earnings. We’re watching the construction of digital infrastructure that rivals physical utilities in scale. The environmental implications are staggering. Even if these data centers run on renewable energy (a big if), that’s renewable capacity that can’t power homes, schools, or hospitals.

The timing is particularly notable given the broader conversation about AI sustainability. Google recently announced a $15 billion AI investment in India, part of a global race to plant AI infrastructure flags in emerging markets. Every major tech company is making similar bets, and the cumulative energy demands are starting to strain grid operators from Texas to Ireland.

The Stargate Project and America’s Industrial Policy

This Broadcom deal slots into OpenAI’s larger Stargate initiative, a collaboration with Oracle and SoftBank to build AI data centers across the United States. The first phase alone calls for that same 10-gigawatt deployment, with custom inference chips and racks scheduled to begin rolling out in late 2026.

What we’re witnessing is effectively industrial policy by proxy. The federal government isn’t building this infrastructure directly, but it’s certainly cheering from the sidelines. Successive administrations have made AI leadership a priority, framing it as essential to national security and economic competitiveness. The CHIPS Act, which allocated billions to semiconductor manufacturing, helped create the policy environment that makes deals like this possible.

There’s a democratic governance question lurking here that few are asking: Who decides how we allocate massive amounts of computational resources and energy? These decisions shape what AI applications get built, who benefits from them, and who bears the costs. Right now, those choices are being made in C-suites and venture capital boardrooms, not through any democratic process.

The Chip Sovereignty Game

OpenAI’s push for custom silicon reflects a broader anxiety about supply chain vulnerability. The last few years have taught tech companies that depending on any single supplier, no matter how dominant, creates strategic risk. Nvidia’s near-monopoly on AI training chips has given it tremendous pricing power. Custom chips offer a way out of that dependency.

But there’s a deeper pattern here. The same consolidation dynamics that created Nvidia’s dominance are now playing out one level up the stack. OpenAI is trying to become to AI applications what Nvidia is to AI chips: the unavoidable platform that everyone else has to build on top of. Vertical integration all the way down.

This matters because the companies that control the infrastructure layer will effectively set the rules for the entire AI ecosystem. They’ll decide what gets compute priority, who pays what prices, and which applications are economically viable to run at scale. That’s an enormous amount of private power concentrated in very few hands.

What Comes After the Building Spree

The announcement carefully avoids discussing actual costs, but industry analysts suggest deals of this magnitude likely run into tens of billions of dollars. OpenAI has already committed to infrastructure spending that tops $1 trillion across various partnerships. For a company that only recently started generating meaningful revenue, that’s a staggering bet.

It’s a bet that assumes AI demand will continue growing at exponential rates indefinitely. Maybe it will. But it’s worth noting that every previous computing revolution, from mainframes to PCs to smartphones, eventually hit saturation points where the infrastructure build-out overshot actual demand.

The custom chip deployment timeline is telling: late 2026 for initial rollout, with the full 10 gigawatts coming online over four years. That’s an eternity in AI time. By 2029, we might be working with fundamentally different model architectures that make today’s infrastructure choices look shortsighted. Or we might discover that smaller, more efficient models can do most of what users actually need, making these massive chip farms partially obsolete before they’re fully built.

The Real Infrastructure That Matters

Lost in all the gigawatt-talk is a simpler question: What is all this infrastructure actually for? The stated goal is to make AI more accessible and useful. But accessibility has never really been about chip supply. It’s been about who controls the platforms, who can afford to use them at scale, and whose needs get prioritized in product development.

OpenAI’s infrastructure buildout doesn’t make AI more democratic. It makes OpenAI more powerful. The company is constructing a moat made of silicon and electricity, one that will be nearly impossible for competitors to cross. That might be smart business strategy, but it’s unclear whether it serves the public interest.

The Broadcom partnership is impressive as a feat of engineering ambition and corporate dealmaking. It’s also a reminder that the AI future being built right now is one where a handful of companies control infrastructure that rivals nation-states in scale and consequence. We’re watching the construction of digital empires in real time, powered by enough electricity to light up cities and built on enough capital to fund small countries.

The question isn’t whether this infrastructure will get built. Clearly it will. The question is what kind of future it’s building us toward, and whether anyone outside a few boardrooms gets a vote in the matter. So far, the answer seems to be no.